Software Development Kit

DXNN®

Bring your own AI model™


Just drop in your AI code and run your algorithms.

Deploy any deep learning model from any deep learning framework, end to end, with ease.

Harness the power of DEEPX's NPU today and elevate your deep learning experience to the next level.

DXNN® - DEEPX NPU Software (SDK)

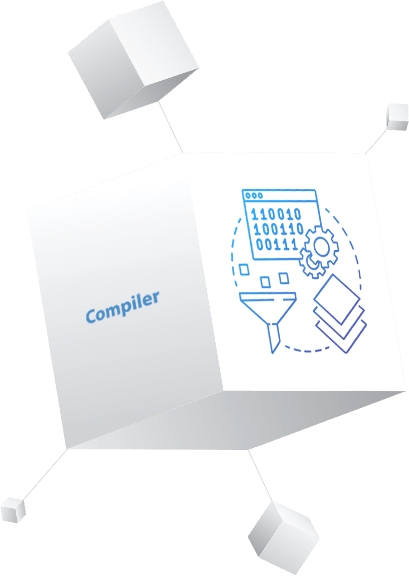

DEEPX's DXNN software framework is an all-in-one solution that streamlines the deployment of deep learning models onto DEEPX's AI SoCs. DXNN comprises two components: the NPU compiler, DX-COM, and the NPU runtime system software, DX-RT. DX-COM provides the tools for high-performance quantization, model optimization, and compilation for NPU inference. DX-RT includes the NPU device driver, the runtime with its APIs, and the NPU firmware. Together, they let you deploy deep learning models efficiently and make full use of DEEPX's AI SoCs.
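To make the quantization step mentioned above more concrete, here is a minimal sketch of generic symmetric int8 post-training quantization in plain Python. It is an illustration of the general idea only: this page does not describe DX-COM's actual algorithm or API, and the function names `quantize_int8` and `dequantize_int8` are hypothetical, not part of DXNN.

```python
# Generic symmetric int8 quantization, for illustration only.
# Assumption: this is NOT DX-COM's method or API; all names are hypothetical.

def quantize_int8(values):
    """Map floats to integer codes in [-127, 127] using one per-tensor scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Fall back to a scale of 1.0 when every value is zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.0, 0.9]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)

# The round-trip error per value is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

A single per-tensor scale keeps the sketch short. Production quantizers typically add calibration data and finer-grained scales to reduce accuracy loss.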

DXNN® Key features

FOR DNN MODELS

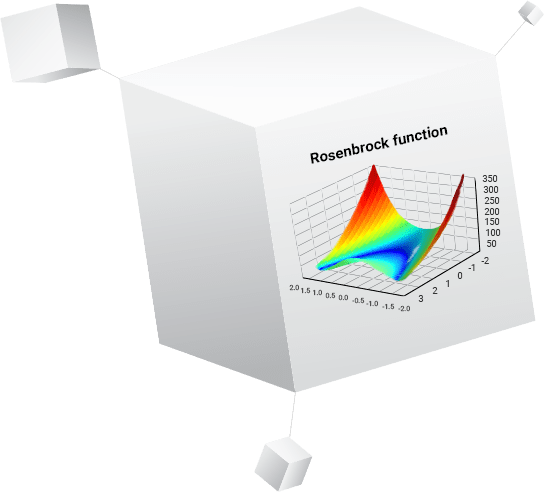

PERFORMING QUANTIZER

MODEL INFERENCE PROCESS

AND RUNTIME